The AI Dependency Trap: Why I Left ChatGPT

I Was Trapped and Didn't Know It

I used ChatGPT for months — it wrote my scripts, held my context, and felt comfortable. The scripts were fluffy. I didn't notice, because I had nothing to compare them to.

When I switched to Claude, the quality difference was immediate. Tighter writing, better structure, fewer filler phrases. Like swapping a well-meaning intern for a senior editor.

The migration was painless — not because ChatGPT made it easy, but because I'd already built my tools as MCP servers running through n8n. My calendar access, note-taking workflows, event automation — none of it lived inside ChatGPT. The AI changed. The plumbing didn't.

The WordPress Lesson

I've run a web design firm for over 25 years — 200+ clients, almost all on WordPress. WordPress didn't win because it was the best CMS. It won because you could leave.

Export your site. Move hosts. Overnight if you had to. That single capability created an entire ecosystem. Hosts competed on quality instead of lock-in. Clients trusted the investment because they'd never be held hostage.

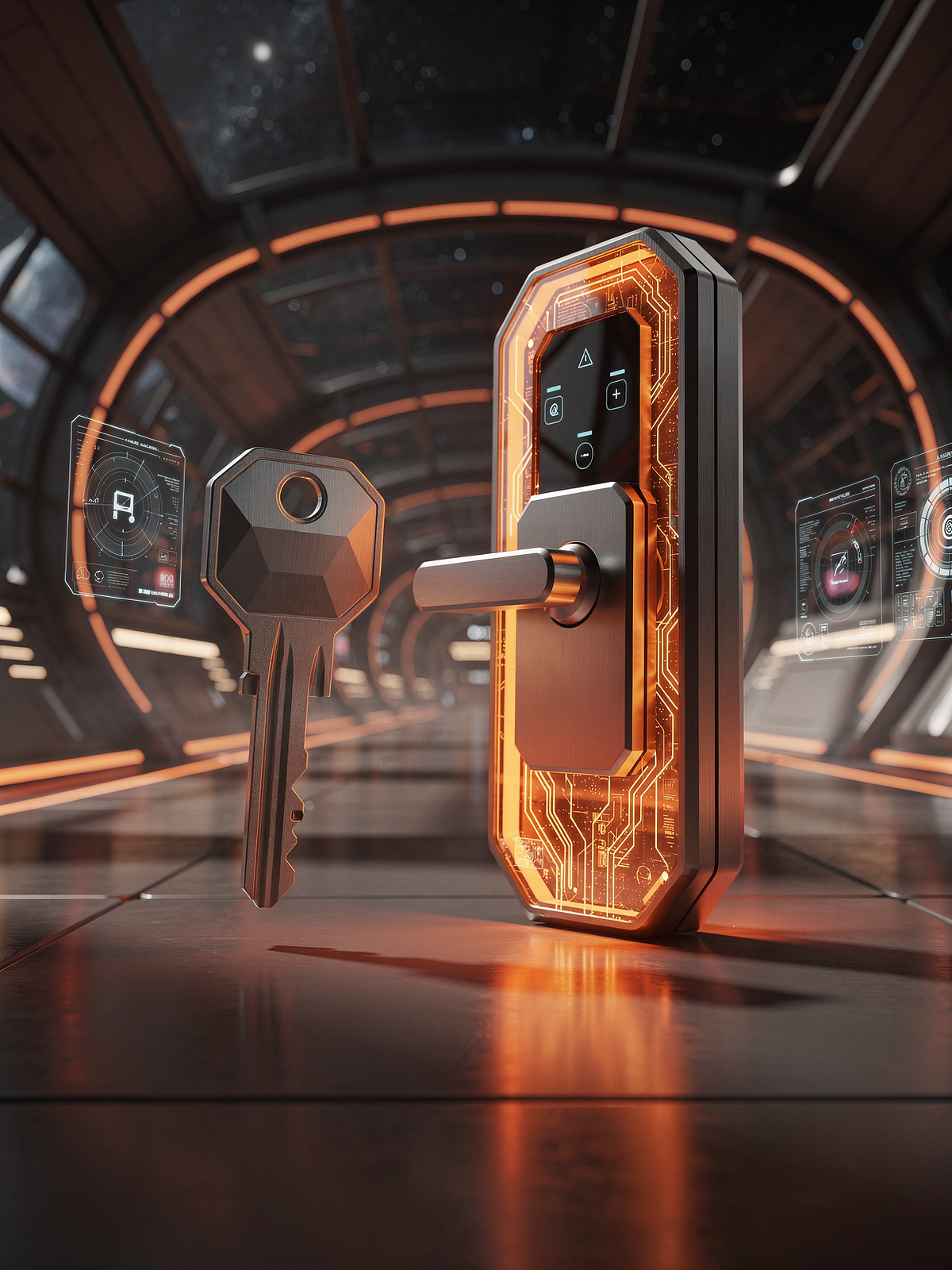

The Portability Principle — Own the Exit

The ability to walk away is what keeps vendors honest and keeps power with the client.

- Swap the model, keep the plumbing
- Proprietary connectors = lock-in
- Standard protocols = freedom

AI tooling is at exactly the same inflection point right now. If your tools are MCP servers you own, your AI is replaceable. If your tools are ChatGPT plugins, Claude Projects, or Gemini extensions — you're the one locked in.

This isn't theoretical. Earlier this year, Anthropic briefly restricted third-party tools from using Claude subscription tokens — breaking workflows for thousands of developers overnight. The ones who had portable tool setups through MCP barely noticed. The ones locked into Claude-specific integrations scrambled.

MCP — The Protocol That Makes This Possible

MCP — Model Context Protocol — is an open standard that lets any AI model call external tools through a single, universal interface. Instead of building custom integrations for every AI provider, you build once and connect anywhere.

I run all my tools through n8n's MCP implementation. My AI connects to n8n, n8n connects to everything else. Google Calendar, Obsidian, Slack — n8n handles all the OAuth on the backend. The AI never touches those tokens directly.
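To make "build once and connect anywhere" concrete, here is a minimal sketch of the message an AI client sends when it calls a tool over MCP. MCP messages are JSON-RPC 2.0, and tool invocations use the `tools/call` method. The helper `build_tool_call`, the tool name `create_calendar_event`, and its arguments are hypothetical, made up for illustration. They are not taken from my actual n8n setup.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request for MCP's tools/call method."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, for illustration only.
request = build_tool_call(
    1,
    "create_calendar_event",
    {"title": "Client review", "start": "2025-01-14T10:00:00Z"},
)

print(json.dumps(request, indent=2))
```

Nothing in that payload names an AI vendor. Any model that can emit this shape can use the same tool, which is the whole point.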

Without n8n

AI → OAuth to Google → OAuth to Slack → OAuth to Notion

Each connection is provider-specific. Change your AI, rebuild everything.

With n8n

AI → MCP (header auth) → n8n → handles all OAuth

One connection. Swap the AI anytime. Tools don't notice.
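As a rough sketch of that single connection, the snippet below builds (but does not send) one authenticated HTTP request to an n8n MCP endpoint. The URL, the token, and the choice of a bearer token in the `Authorization` header are all assumptions. Your n8n credential may use a different header name, and a real MCP client would also run the protocol's initialization handshake before listing or calling tools.

```python
import json
import urllib.request

# Placeholders, not a real endpoint or credential.
N8N_MCP_URL = "https://n8n.example.com/mcp/my-tools"
N8N_TOKEN = "replace-me"


def build_mcp_request(payload: dict) -> urllib.request.Request:
    """Wrap a JSON-RPC payload in a POST request carrying one auth header."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        N8N_MCP_URL,
        data=body,
        method="POST",
        headers={
            # Assumed header scheme; the AI side only ever holds this one secret.
            "Authorization": f"Bearer {N8N_TOKEN}",
            "Content-Type": "application/json",
            "Accept": "application/json, text/event-stream",
        },
    )


# Ask the server which tools it exposes.
req = build_mcp_request({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})

# urllib.request.urlopen(req) would send it; omitted because the URL is a placeholder.
```

The Google, Slack, and Notion OAuth tokens never appear on this side of the connection. They stay inside n8n.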

n8n actually has three distinct ways to work with MCP — a Gateway, a Trigger, and a Tool — each serving different purposes. I wrote a deep dive into how MCP works inside n8n if you want the technical details. For this post, what matters is the principle: your tools should outlive your AI provider.

What OpenClaw Made Obvious

OpenClaw is an open-source framework that turns an AI model into a persistent assistant — running on your machine, scheduling its own tasks, managing memory across sessions. It feels less like chatting with a model and more like having an employee who happens to be software.

OpenClaw is model-agnostic. The framework doesn't care whether it's running Claude, GPT, Gemini, or a local model. The tools, memory, and scheduling are all infrastructure you own. The model is just the brain you plug in.

Want to see it in action? Here's a raw video of me integrating MCP tools with Cortana, my OpenClaw assistant:

I change one line of config. My tools stay. My workflows stay. My data stays.
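Here is a small sketch of what "one line of config" means in practice. This is not OpenClaw's actual config format. `AssistantConfig`, its fields, and the model names are hypothetical, chosen only to show that the model is one field and everything else is untouched by a swap.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AssistantConfig:
    """Hypothetical assistant config: the model is just one field."""
    model: str        # the only value that changes on a swap
    mcp_url: str      # tools live behind this endpoint
    memory_dir: str   # memory stays on your own disk


config = AssistantConfig(
    model="claude",                                   # placeholder model id
    mcp_url="https://n8n.example.com/mcp/my-tools",   # placeholder URL
    memory_dir="~/assistant/memory",                  # placeholder path
)

# Swap the brain; everything else carries over unchanged.
swapped = replace(config, model="local-llama")

print(swapped)
```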

It's Happening On Your Phone Too

Apple's Shortcuts app is basically n8n for your phone. The local model works for small tasks but chokes on large text. The solution is the same layered approach:

📱 Phone

iOS Shortcuts → webhooks → n8n

🖥️ Desktop

OpenClaw → MCP → n8n

🖧 Server

Scheduled workflows → APIs → n8n

n8n is the constant. That's not theoretical. That's my Tuesday.

The Real Promise Isn't AGI

⚡ Local Token Pricing

Electricity, not API bills.

🔒 Full Privacy

Data never leaves your machine.

🏠 True Ownership

Your hardware, your workflows, your drives.

We don't need AGI. We can't even control the models we have now. What we need are local models we control.

What You Should Do Today

Portability is power. 25 years in web hosting. Same lesson.

How MCP Works in n8n

The technical deep dive — Gateway, Trigger, and Tool explained with GIFs and real workflows.

Read the MCP Guide →

The Enterprise Side

Vendor lock-in, procurement strategy, and enterprise AI architecture.

Read the Enterprise Post →